Cerebras Systems has introduced its 2nd Generation Wafer Scale Engine processor in what appears to be a significant breakthrough for the company.

Introduced at the Hot Chips 2020 conference, the new chip, which is as large as a single 300-mm wafer, packs more than twice as many transistors as its predecessor, along with a whopping number of AI-optimized cores.

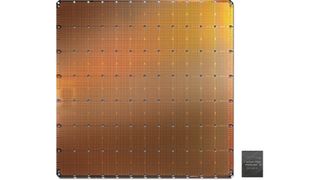

The Cerebras Wafer Scale Engine Generation 2 has a die size of roughly 46,225 mm², as it is limited by the physical size of a 300-mm silicon wafer. The WSE 2 is made using TSMC's 7nm fabrication technology and contains 2.6 trillion transistors. To put the numbers into context, NVIDIA's A100 measures 826 mm² and contains 54.2 billion transistors.
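To put that comparison in concrete terms, the short sketch below divides each chip's transistor count by its die area. It assumes the WSE 2 figure of 2.6 trillion transistors that Cerebras has quoted, and it treats the whole die as usable area, which is a simplification.

```python
# Die area (mm²) and transistor count as quoted for each chip.
chips = {
    "Cerebras WSE-2": (46_225, 2.6e12),
    "NVIDIA A100": (826, 54.2e9),
}


def density_mtr_per_mm2(area_mm2: float, transistors: float) -> float:
    """Average transistor density in millions of transistors per mm²."""
    return transistors / area_mm2 / 1e6


for name, (area, count) in chips.items():
    print(f"{name}: {density_mtr_per_mm2(area, count):.1f} MTr/mm²")

wse_area, wse_count = chips["Cerebras WSE-2"]
a100_area, a100_count = chips["NVIDIA A100"]
print(f"Area ratio: {wse_area / a100_area:.1f}x")            # about 56.0x
print(f"Transistor ratio: {wse_count / a100_count:.1f}x")    # about 48.0x
```

On these numbers the two chips have broadly similar average density, at roughly 56 and 66 million transistors per mm². This suggests that the WSE 2's lead comes mainly from its sheer area rather than from denser packing.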

Incredible complexity

The Wafer Scale Engine 2 packs up to 850,000 proprietary cores specifically designed for AI/DL/ML applications. Every core has its own SRAM (adding up to tens of gigabytes of SRAM in each WSE), and all of them are interconnected using a special 2D mesh network that allows all 850,000 cores to work on the same task.

Cerebras claims that its Wafer Scale Engines are designed for workloads that would not perform well enough on traditional supercomputers using hundreds of CPUs/GPUs/TPUs. Meanwhile, Wafer Scale Engines can be used with existing AI frameworks such as TensorFlow or PyTorch, albeit with a special compiler from Cerebras.

Cerebras says that it has already received its 2nd Generation Wafer Scale Engine from the fab and is currently testing it internally. There is no word yet on the actual performance or even the power consumption of the WSE 2. For reference, the 1st Generation WSE consumes up to 20 kW and is supplied as a 15U rack system.